Let's make streaming analytics boring

Streaming analytics has developed a stigma as a technology that has long promised to revolutionize data processing, but never quite delivered on this promise. Indeed, more than a decade after the introduction of Apache Flink, most users find streaming analytics tools difficult to use, restrictive, and unreliable. This sentiment is echoed within the streaming community itself, with some worried about the future of the technology, and some even advising against starting new companies in this space.

Let me start by stating the obvious: streaming analytics is the only way to address the limitations of traditional batch analytics when it comes to processing changing data with low latency. In a world where businesses need to make decisions based on the latest data, daily reports generated by batch tools just don't cut it anymore. If there is one thing I learned from talking to numerous companies over the last year, it is that the demand for streaming analytics is enormous. What is missing is a technology capable of satisfying this demand.

Working back from the ideal user experience

The other day at the playground my three-year-old was playing tag with a friend, while I was chatting with her dad. As these things go, I tried explaining what I do for a living, but my explanation of streaming analytics didn't land, until I mentioned SQL. This is when his eyes lit up: "I write a lot of SQL at work. My manager always asks for complicated reports, so I use SQL to generate them." He works in supply chain management and has no engineering background.

To me, this is the true triumph of modern batch analytics: with a few lines of SQL anyone can do complex processing over vast arrays of data, without having to understand how the database works internally. I believe we should strive for the same kind of user experience for streaming analytics.

It's tempting to declare that streaming is fundamentally harder than batch processing, so we cannot hope to achieve the same level of ergonomics. I don't subscribe to this point of view. There's a lot we can do to narrow the gap. Let's start by breaking the problem into two broad areas.

- Operational experience implementing and maintaining streaming pipelines within an organization.

- End-user experience writing and executing queries.

Operational experience

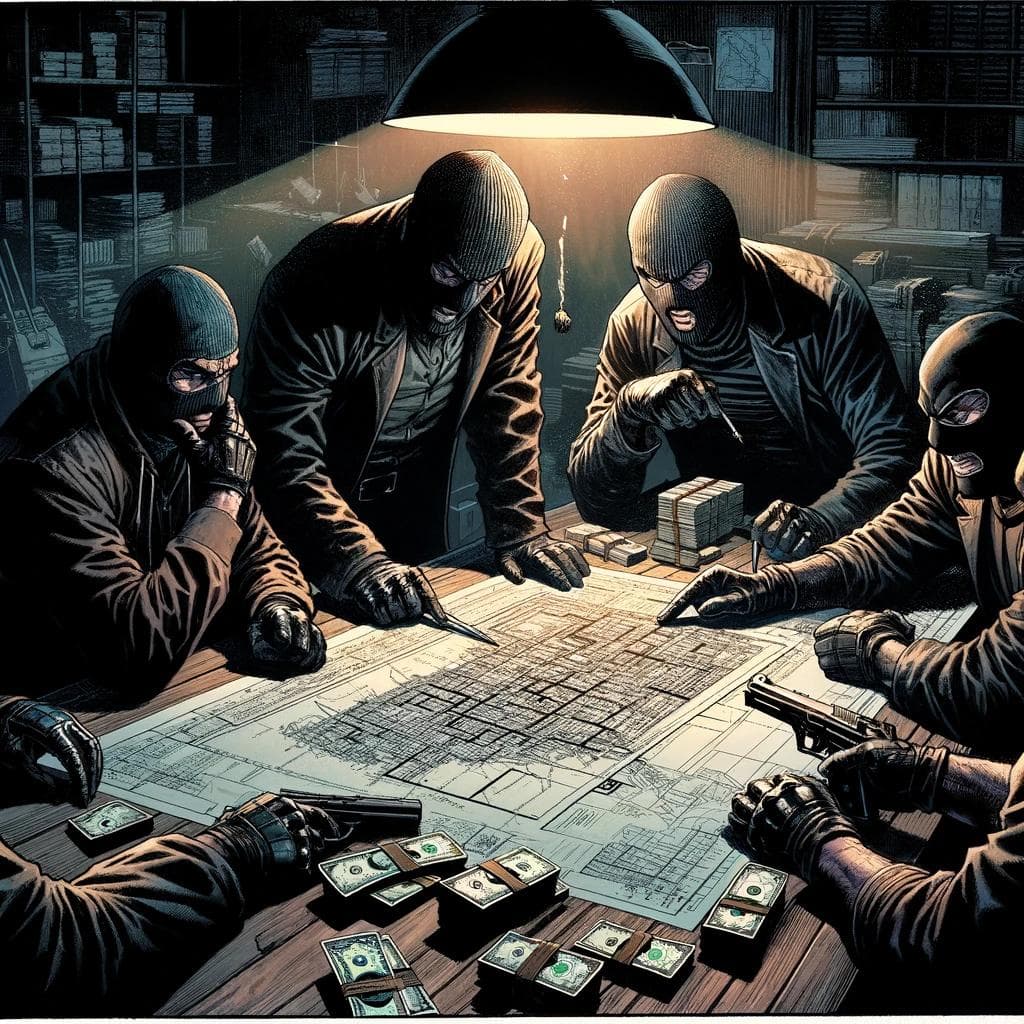

Modern data warehouses are built to deliver rock-solid data integrity, durability, and ease of operation. You have to mess up pretty badly to lose data with one of those. Operating such a warehouse is as boring and reliable as banking in Switzerland. In contrast, building a streaming analytics pipeline today feels more like planning a bank heist: exciting, but highly dangerous, and probably not worth the risk.

Here is a typical streaming architecture today. Input data is ingested from an OLTP database using a CDC tool like Debezium and streamed to Kafka. The streaming analytics engine reads data from Kafka, computes new outputs and writes them to the data warehouse, again via Kafka.

On the surface, it all makes sense. Streaming analytics operates on streaming data, so it's only logical that it should read inputs from and write outputs to a streaming platform like Kafka. In reality, there are just too many problems with this architecture for it to be practical.

- Configuration hell. For starters, every color in the above diagram represents a separate administrative domain, managed by a separate team within the enterprise IT department. Streaming analytics not only introduces a new system into the mix, but also requires every other part of the pipeline to be configured and provisioned in a very specific way. To get a sense of the resulting configuration hell, check out any Debezium connector documentation page, in particular the lengthy section on how the source database must be configured to work with Debezium. Dare to change this configuration at runtime, and your streaming pipeline will break and probably lose data. The resulting complexity alone places streaming analytics beyond the reach of many small and medium-sized companies.

- Square pegs, round holes. Today's databases and data warehouses are not designed for streaming ingress or egress. Technologies like CDC and Snowpipe retrofit these systems with limited streaming capabilities, but without changing the way the database operates internally they only go so far. In my experience, these systems have important functional and non-functional limitations. Here are two examples: (1) enabling CDC support on SQL Server dramatically reduces its throughput in processing transactional operations; (2) Snowpipe Streaming supports only insert operations, not updates or deletes.

- Fault in-tolerance. For the streaming pipeline above to produce results reliably and correctly, it must solve the three-way state synchronization problem. That's right, not only the OLTP database on the left and the data warehouse on the right, but also the streaming analytics engine in the middle maintains state. It's too easy to think of it as a stateless bump in the wire, data streaming in and out without leaving a trace. This couldn't be further from the truth. At the most fundamental level, streaming analytics involves a memory-performance tradeoff: it updates query results on the fly by maintaining the memory of the previous computation.

If you've taken a distributed systems course, you know that consistency in a distributed system requires a common synchronization protocol supported by all its components. No such protocol exists in this case. In fact, every system in the diagram is a separate product, built and operated independently of all others. Even understanding the various failure modes in this architecture is a daunting task, let alone designing ways to recover from them.

- There's no such thing as a streaming-only workload. At least I have yet to encounter one. In reality, every practical system involves some mix of stream and batch processing. Often, this takes the form of backfill, where in order to produce correct results the streaming engine must ingest a range of historical data before processing streaming inputs. As another example, real-time feature engineering workloads require evaluating the same feature queries in batch mode for model training and in streaming mode for real-time inference. Implementing this type of hybrid operation in the above architecture requires complex orchestration across all its components, making it even more unwieldy.
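To make the points about state and backfill concrete, here is a minimal sketch in Python. It is not how any particular engine works; the `RunningTotals` class, the event tuples, and the sample data are all hypothetical, chosen only to illustrate why a streaming operator that keeps per-key state produces wrong answers if it starts (or restarts) without first ingesting historical data.

```python
from collections import defaultdict


class RunningTotals:
    """Toy streaming operator (hypothetical): SUM(amount) GROUP BY customer."""

    def __init__(self):
        # This dictionary is the "memory of the previous computation".
        # A real engine would have to persist and recover it after a failure.
        self.totals = defaultdict(int)

    def ingest(self, events):
        """Apply (customer, amount) events; return only the totals that changed."""
        changed = {}
        for customer, amount in events:
            self.totals[customer] += amount
            changed[customer] = self.totals[customer]
        return changed


# Made-up sample data: history already in storage, plus one new live event.
historical = [("alice", 10), ("bob", 5), ("alice", 7)]
live = [("alice", 3)]

# With backfill: bulk-load the history, then process the live stream.
with_backfill = RunningTotals()
with_backfill.ingest(historical)
update_ok = with_backfill.ingest(live)

# Without backfill (or after losing state): same event, wrong total.
cold_start = RunningTotals()
update_wrong = cold_start.ingest(live)

print(update_ok)     # {'alice': 20}
print(update_wrong)  # {'alice': 3}
```

The live event is identical in both runs; only the state differs. That is the sense in which the engine in the middle cannot be treated as a stateless bump in the wire.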

With all this in mind, it's no surprise that only big players with high-value use cases can afford to operate streaming pipelines. To make all the pieces in today's streaming architecture work in unison, delivering robust performance and stability, is an extraordinary feat of engineering. For a technology to become mainstream, it shouldn't force users to live on the edge. Boring is good! So, how do we make streaming analytics boring?

How do we fix it?

One thing is clear: streaming analytics cannot be implemented as a bump in the wire connecting other systems. You cannot build a data processing system without fast and reliable access to data. In this respect, streaming analytics is no different from batch.

There are several ways to address this, including integrating streaming analytics into an existing data warehouse (i.e., moving compute to data) and building a dedicated datastore underneath the streaming engine (i.e., moving data to compute).

Personally, I believe that the future of streaming analytics lies with the Lakehouse architecture. The rise of the Lakehouse has in a few short years transformed the data landscape. And it's a welcome transformation for the streaming community. Two properties of the Lakehouse are particularly important to us:

- Decoupled compute and storage. Unlike data warehouses, a Lakehouse isn't bundled with a query engine. Instead, it is built around an open data format, allowing various query engines, like Spark SQL, to operate on it. A streaming query engine can be added to the mix without waiting for the Lakehouse developer to add support for it.

- Built-in support for batch and streaming. Lakehouses adopt log-structured storage formats, which support batch and streaming workloads equally well. A streaming engine running on a Lakehouse can take advantage of high-throughput, low-latency streaming input and output, while also performing bulk data loads for backfill.

Thus, an optimal streaming architecture for an organization using the Lakehouse as its storage platform would look like this:

This architecture simplifies dependencies across multiple administrative domains and eliminates the impedance mismatch between compute and storage layers. It provides access to both new and historical data, enabling batch computation and fault tolerance.

Let us zoom in on the right-hand side of the diagram. The streaming query engine reads a stream of input changes from Lakehouse tables, computes updates to the queries, and writes them to a different set of tables.

Such tables, which store the results of a query, are known as materialized views, and the process of updating views to reflect new data is known as incremental view maintenance. Thus, the Lakehouse-centric architecture not only eliminates the complex plumbing, but also places streaming analytics in the familiar context of a relational data store with tables and views. The remaining question is whether we can enable users to write streaming queries with the same ease as batch queries.
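As a rough illustration of incremental view maintenance, the sketch below maintains one simple view in two ways: by recomputing it from scratch (the batch approach) and by applying only the changes (the incremental approach). Everything here is an assumption made for the example: the `orders` rows, the query, the `CountView` class, and the convention of tagging each changed row with a weight of +1 for an insert and -1 for a delete. It is one common way to represent changes, not a description of any specific engine.

```python
from collections import Counter


def full_recompute(orders):
    """Batch evaluation of the (hypothetical) view:
    SELECT region, COUNT(*) FROM orders WHERE amount > 100 GROUP BY region
    """
    return Counter(region for region, amount in orders if amount > 100)


class CountView:
    """The same view, maintained incrementally from a stream of changes."""

    def __init__(self):
        self.counts = Counter()

    def apply(self, delta):
        """delta is a list of ((region, amount), weight) pairs,
        where weight is +1 for an inserted row and -1 for a deleted row."""
        for (region, amount), weight in delta:
            if amount > 100:  # same filter as the batch query
                self.counts[region] += weight
                if self.counts[region] == 0:
                    del self.counts[region]  # drop empty groups
        return dict(self.counts)


# Initial table contents, loaded into the view as inserts.
table = [("eu", 150), ("us", 90), ("eu", 300)]
view = CountView()
initial = view.apply([(row, +1) for row in table])

# A change arrives: one row is deleted and one is inserted.
delta = [(("eu", 150), -1), (("us", 250), +1)]
updated = view.apply(delta)

# Apply the same change to the base table and recompute from scratch.
table.remove(("eu", 150))
table.append(("us", 250))

print(initial)  # {'eu': 2}
print(updated)  # {'eu': 1, 'us': 1}
print(updated == dict(full_recompute(table)))  # True
```

The incremental path touches only the two changed rows, while the batch path rescans the whole table, yet both arrive at the same view contents. That equivalence is the property a user would want to be able to take for granted.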

End-user experience

Back to my playground conversation: my fellow toddler dad was able to use SQL effectively without being a database expert. As long as he could frame his questions as SQL queries, they just worked. Achieving this simplicity for streaming analytics requires:

- Support for arbitrary SQL. Users should be able to write arbitrary SQL queries in a rich SQL dialect.

- Performance out of the box. Not all queries are created equal, but by and large users should be able to rely on the engine to evaluate complex queries efficiently on large datasets without having to optimize the queries by hand.

- Well-defined semantics. Users must be able to understand and trust the output produced by the system.

While many existing systems support SQL queries on streaming data, none fully meet these requirements. With a few notable exceptions, they lack a strong semantic and algorithmic foundation. Simply put, they lack a clear specification of exactly what they compute, and how this computation is structured. While many types of software can be successfully built this way, database software is not one of them. This is precisely why we are so obsessed with building Feldera on the right foundation. But that's a topic for another blog post.

Conclusion

I believe that the future of streaming analytics is bright. There is a strong demand for the technology, but the technology itself must be ready to meet this demand. First, we need to package it in the right form factor. Streaming analytics cannot operate as a bump in the wire connecting other systems. One promising approach is to integrate it into the Lakehouse architecture as an incremental view maintenance engine.

Second, streaming query engines must strive toward the versatility, performance, and ease of use of modern batch systems. This is a difficult goal, and it may take us, as a community, some time to get there, but it's fundamentally achievable.